Create Your Perfect Robots.txt File in Seconds—No Coding Required

Take complete control of how search engines crawl your website. Our intuitive robots.txt generator helps you create, customize, and optimize your crawler directives with just a few clicks. Whether you need to protect admin pages, manage your crawl budget, or block specific bots, our tool makes it effortless—even if you’ve never worked with robots.txt before.

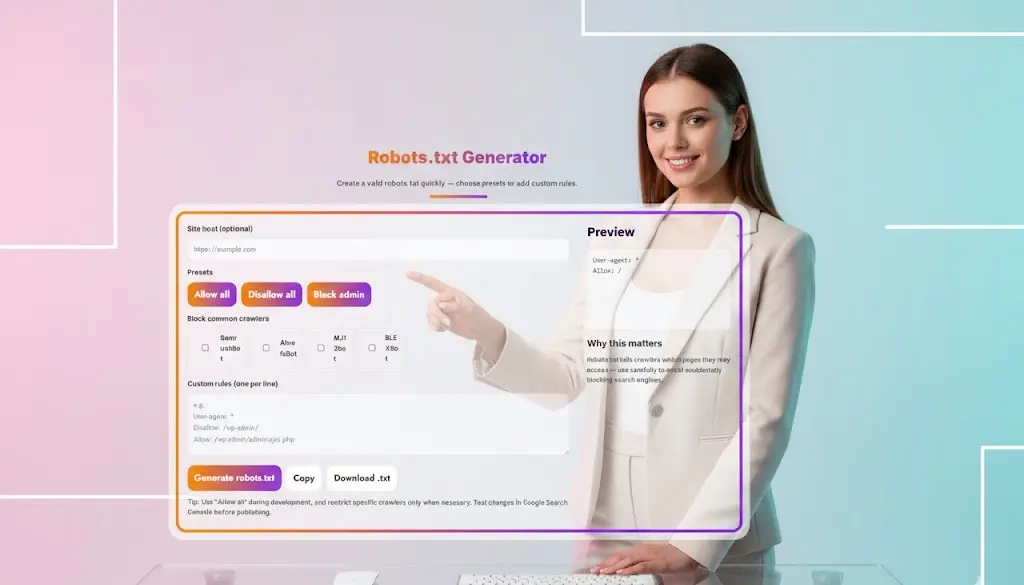

What is a Robots.txt File and Why Does It Matter?

A robots.txt file is a critical component of your website’s SEO infrastructure that tells search engine crawlers which pages they can and cannot access on your site. This simple text file, placed in your website’s root directory, acts as a communication protocol between your website and web crawlers from Google, Bing, and other search engines.

Using a robots.txt generator simplifies the process of creating this essential file, ensuring you don’t accidentally block important pages or leave sensitive areas exposed to unwanted crawlers. Whether you’re managing a small blog or a large enterprise website, understanding how to properly configure your robots.txt file is fundamental to controlling your site’s visibility in search results.

Understanding the Importance of Robots.txt for SEO

The robots.txt file plays a crucial role in your SEO strategy by directing crawler traffic efficiently. When search engine bots visit your website, they first look for the robots.txt file to understand which sections they’re permitted to crawl. This helps you:

- Conserve Crawl Budget: Large websites have a limited crawl budget—the number of pages search engines will crawl in a given timeframe. By blocking unnecessary pages, you ensure crawlers focus on your most important content.

- Protect Sensitive Areas: Keep crawlers out of administrative pages, staging environments, and internal search results.

- Prevent Duplicate Content Issues: Block search engines from crawling parameter-based URLs or filtered pages that could create duplicate content problems.

- Improve Site Performance: Reduce server load by preventing aggressive crawlers from overwhelming your resources.

How to Use Our Robots.txt Generator Tool

Our free robots.txt generator makes creating a valid robots.txt file straightforward, even if you have no technical experience. Here’s how to use the tool effectively:

Step 1: Enter Your Site Host (Optional)

While optional, entering your website’s URL helps contextualize the rules you’re creating. This is particularly useful when working with multiple sites or when you want to keep track of configurations.

Step 2: Choose from Preset Options

Our robots.txt generator offers three convenient presets:

- Allow All: Permits all crawlers to access your entire website. Ideal for new websites that want maximum visibility or sites with no content restrictions.

- Disallow All: Blocks all crawlers from accessing your entire site. Useful for development sites, staging environments, or sites undergoing major restructuring.

- Block Admin: Prevents crawlers from accessing administrative areas like /wp-admin/, /admin/, and similar directories. This is the most common configuration for production websites.
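As a rough illustration, the three presets might correspond to output along the following lines. This is only a sketch: the `PRESETS` table, the `preset_rules` function, and the exact paths listed for Block Admin are assumptions made for this example, not the tool's actual output.

```python
# Hypothetical mapping from preset name to robots.txt lines.
# The generator's real output may differ; the admin paths are assumed.
PRESETS = {
    # An empty Disallow value means "nothing is blocked".
    "allow_all": ["User-agent: *", "Disallow:"],
    # A bare slash blocks every path on the host.
    "disallow_all": ["User-agent: *", "Disallow: /"],
    "block_admin": ["User-agent: *", "Disallow: /wp-admin/", "Disallow: /admin/"],
}


def preset_rules(name: str) -> str:
    """Return the robots.txt text for a preset name."""
    return "\n".join(PRESETS[name]) + "\n"


for name in PRESETS:
    print(f"# {name}")
    print(preset_rules(name))
```

The only difference between Allow All and Disallow All is a single slash, which is why a leftover staging file can hide an entire site.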

Step 3: Block Specific Common Crawlers

Sometimes you need granular control over which bots can access your site. Our tool lets you selectively block common crawlers:

- SemrushBot: Blocks the SEMrush crawler used by the popular SEO tool

- AhrefsBot: Prevents Ahrefs’ bot from crawling your site

- MJ12bot: Blocks the Majestic SEO crawler

- BLEXBot: Stops BLEXBot, which indexes web pages for various purposes

Blocking these crawlers doesn’t affect your search engine rankings, as they’re third-party SEO tools rather than major search engines.
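To see what bot-specific blocking looks like in the file itself, the sketch below builds one group per blocked crawler and then checks the result with Python's standard `urllib.robotparser`. The `block_bots` helper is hypothetical and the output format is an assumption about what such a generator could emit; user-agent tokens should be confirmed against each vendor's documentation.

```python
from urllib.robotparser import RobotFileParser


def block_bots(bots):
    """Build one 'Disallow: /' group per bot, then allow everyone else."""
    lines = []
    for bot in bots:
        # The blank line ends the group for this bot.
        lines += [f"User-agent: {bot}", "Disallow: /", ""]
    lines += ["User-agent: *", "Disallow:"]
    return "\n".join(lines) + "\n"


robots_txt = block_bots(["SemrushBot", "AhrefsBot"])
print(robots_txt)

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# The named bots are blocked; other crawlers fall through to the '*' group.
print("AhrefsBot allowed:", parser.can_fetch("AhrefsBot", "https://example.com/page"))
print("Googlebot allowed:", parser.can_fetch("Googlebot", "https://example.com/page"))
```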

Step 4: Add Custom Rules

For advanced users, the custom rules section allows you to create specific directives. Each rule should be on a separate line and follow standard robots.txt syntax:

User-agent: *

Disallow: /private/

Allow: /public/

Disallow: /admin/

Allow: /admin/public-page.html

Step 5: Preview and Generate

The preview section shows you exactly how your robots.txt file will appear. Once satisfied, click “Generate robots.txt” to create the file, then either copy the content or download it as a .txt file.
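Before downloading the file, it can help to sanity-check how overlapping custom rules like those in Step 4 resolve. Under the Robots Exclusion Protocol standard (RFC 9309), which Google follows, the longest matching rule wins and Allow wins a tie. The sketch below applies that precedence to the Step 4 rules. `is_allowed` is a hypothetical helper, and it is deliberately simplified: plain prefix matching only, with no wildcard support.

```python
# Rules from the Step 4 example, as (directive, path) pairs for "User-agent: *".
RULES = [
    ("disallow", "/private/"),
    ("allow", "/public/"),
    ("disallow", "/admin/"),
    ("allow", "/admin/public-page.html"),
]


def is_allowed(path: str, rules=RULES) -> bool:
    """Longest-match precedence: the longest matching rule decides.

    Simplified sketch: plain prefix matching, no '*' or '$' handling.
    """
    best_len = -1
    allowed = True  # no matching rule means the path may be crawled
    for directive, prefix in rules:
        if not path.startswith(prefix):
            continue
        is_allow = directive == "allow"
        # A longer match wins; on equal length, Allow wins the tie.
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


for p in ["/admin/settings", "/admin/public-page.html", "/blog/post"]:
    print(p, is_allowed(p))
```

Not every parser follows this precedence. Some older implementations apply the first matching rule in file order, so it is worth testing overlapping rules against the crawlers you care about.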

Best Practices for Robots.txt Configuration

When using a robots.txt maker, follow these best practices to avoid common pitfalls:

1. Switch to “Allow All” or Production Rules at Launch

A restrictive robots.txt is appropriate while you develop and test your site, but replace it with the Allow All preset or your production rules when the site goes live so search engines can discover your content. Many developers forget to update restrictive robots.txt files after launch, inadvertently hiding their entire site from search engines.

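2. Verify Changes in Google Search Console

Check changes to your robots.txt file with a validator before publishing, then confirm in Google Search Console that Google fetched and parsed the new file without errors. Google retired its standalone robots.txt Tester in 2023; the robots.txt report and the URL Inspection tool now show whether a given URL is blocked, which helps identify unintended consequences.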

3. Don’t Use Robots.txt for Security

The robots.txt file is publicly accessible and doesn’t provide security. Never rely on it to hide sensitive information—use proper authentication methods like password protection or access controls instead.

4. Be Specific with Disallow Rules

When blocking crawlers from specific sections, be as precise as possible. Overly broad disallow rules can accidentally block important pages from being indexed.
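Because Disallow values are matched as path prefixes, a missing trailing slash can block far more than intended. The following sketch uses Python's standard `urllib.robotparser` to compare a broad rule with a precise one. The paths and the `blocked_paths` helper are made up for illustration.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(rules: str, paths):
    """Return the paths that the given robots.txt text blocks for any crawler."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return [p for p in paths if not parser.can_fetch("*", p)]


# Hypothetical site paths: only the first one is meant to be hidden.
PATHS = ["/p/internal-report", "/products/shoes", "/pricing"]

too_broad = "User-agent: *\nDisallow: /p\n"   # matches every path starting with /p
precise = "User-agent: *\nDisallow: /p/\n"    # matches only the /p/ directory

print("Disallow: /p  blocks", blocked_paths(too_broad, PATHS))
print("Disallow: /p/ blocks", blocked_paths(precise, PATHS))
```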

5. Allow Access to CSS and JavaScript

Modern search engines need to render your pages to understand them fully. Ensure your robots.txt doesn’t block CSS, JavaScript, or image files necessary for rendering.

Common Robots.txt Use Cases

E-commerce Websites

Online stores often need to block filtered product pages, search result pages, and cart URLs to prevent duplicate content issues. A typical e-commerce robots.txt might include:

User-agent: *

Disallow: /cart/

Disallow: /checkout/

Disallow: /search?

Disallow: /*?sort=

Allow: /

WordPress Sites

WordPress users commonly block administrative areas and certain system directories:

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Disallow: /wp-includes/

News and Publishing Sites

News sites might want to keep crawlers out of comment sections while giving a specific user agent, such as Googlebot-News, its own unrestricted group. Google’s crawlers ignore Crawl-delay, so there is no need to set one for Googlebot-News:

User-agent: Googlebot-News

Allow: /

User-agent: *

Disallow: /comments/

Understanding Crawler Behavior

Different web crawlers behave differently when encountering robots.txt files. The major search engines (Google, Bing, Yahoo) respect robots.txt directives, but some aggressive crawlers ignore them entirely. Using our robots.txt generator to create proper rules is just the first step—monitoring crawler behavior through server logs helps identify problematic bots that require additional blocking measures at the server level.
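As a starting point for that kind of monitoring, the sketch below counts requests per user agent in an access log. It assumes the common "combined" log format, where the user agent is the last double-quoted field on each line. The sample lines and the `count_user_agents` helper are invented for illustration, so adjust the pattern to your server's actual format.

```python
import re
from collections import Counter

# Assumes the "combined" access-log format: the user agent is the
# last double-quoted field on each line.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')


def count_user_agents(log_lines):
    """Count requests per user-agent string."""
    counts = Counter()
    for line in log_lines:
        match = UA_PATTERN.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Made-up sample lines using documentation IP ranges.
SAMPLE_LOG = [
    '203.0.113.5 - - [01/Jan/2025:00:00:01 +0000] "GET /a HTTP/1.1" 200 512 "-" "ExampleBot/1.0"',
    '203.0.113.5 - - [01/Jan/2025:00:00:02 +0000] "GET /b HTTP/1.1" 200 512 "-" "ExampleBot/1.0"',
    '198.51.100.7 - - [01/Jan/2025:00:00:03 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0"',
]

for agent, hits in count_user_agents(SAMPLE_LOG).most_common():
    print(agent, hits)
```

A bot that keeps requesting disallowed paths in these counts is a candidate for blocking at the server or firewall level, since robots.txt only works for crawlers that choose to obey it.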

Advanced Robots.txt Directives

Beyond basic Allow and Disallow rules, robots.txt supports several advanced directives:

Crawl-Delay

Some crawlers (Bingbot, for example) respect the crawl-delay directive, which specifies how many seconds to wait between requests; Googlebot ignores it:

User-agent: *

Crawl-delay: 10

Sitemap Declaration

You can include your XML sitemap location in robots.txt to help crawlers discover it:

Sitemap: https://example.com/sitemap.xml

Wildcard Characters

Use asterisks (*) to match multiple characters and dollar signs ($) to match the end of URLs:

User-agent: *

Disallow: /*.pdf$

Disallow: /*?sessionid=

Mobile and International Considerations

When optimizing robots.txt for international or mobile sites, remember that:

- Separate mobile URLs (m.example.com) need their own robots.txt file

- International subdomains each require individual robots.txt configuration

- Mobile user agents follow the same rules as desktop unless specifically targeted
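Since a robots.txt file only governs the host it is served from, one quick way to see which file applies to a page is to derive it from the page URL. A minimal sketch, with `robots_txt_url` as a hypothetical helper and `fr.example.com` as a made-up international subdomain:

```python
from urllib.parse import urlsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs the given page URL."""
    parts = urlsplit(page_url)
    # robots.txt applies per scheme and host (including any port),
    # and always lives at the root path.
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


for url in [
    "https://example.com/blog/post",
    "https://m.example.com/blog/post",
    "https://fr.example.com/produits?page=2",
]:
    print(url, "->", robots_txt_url(url))
```

Each of the three URLs resolves to a different robots.txt, so rules added on the main domain have no effect on the mobile or regional hosts.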

Monitoring and Maintaining Your Robots.txt

Creating a robots.txt file isn’t a one-time task. Regular maintenance ensures optimal crawler management:

- Review Regularly: Check at least quarterly whether new sections of your site need blocking

- Monitor Crawl Stats: Use Google Search Console to track how crawlers interact with your site

- Update After Site Changes: Major redesigns or new features may require robots.txt updates

- Validate Syntax: Use validation tools to ensure your file follows proper syntax
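Part of this routine can be automated. The sketch below parses a robots.txt file with Python's standard `urllib.robotparser` and reports whether any must-crawl URL has been blocked, along with the crawl delay and sitemap entries the parser found. The file contents, the `MUST_ALLOW` list, and the `check` helper are assumptions for illustration; `site_maps()` requires Python 3.8 or later, and this parser does not understand `*` or `$` wildcards.

```python
from urllib.robotparser import RobotFileParser

# Example file contents; in practice, read your deployed robots.txt.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Disallow: /admin/
Sitemap: https://example.com/sitemap.xml
"""

# Hypothetical URLs that must stay crawlable after every change.
MUST_ALLOW = ["https://example.com/", "https://example.com/products/"]


def check(robots_txt: str, must_allow):
    """Return (blocked important URLs, crawl delay for '*', sitemap list)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [u for u in must_allow if not parser.can_fetch("*", u)]
    return blocked, parser.crawl_delay("*"), parser.site_maps()


blocked, delay, sitemaps = check(ROBOTS_TXT, MUST_ALLOW)
print("Blocked important URLs:", blocked)
print("Crawl-delay:", delay)
print("Sitemaps:", sitemaps)
```

Running a check like this after each deployment catches the most damaging mistake, an important URL that is accidentally blocked, before crawlers see it.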

Frequently Asked Questions About Robots.txt Generator

Q: What is a robots.txt generator and why should I use one?

A: A robots.txt generator is an online tool that helps you create a properly formatted robots.txt file without needing to write code manually. You should use one because it prevents syntax errors that could accidentally block search engines from your entire site, provides presets for common configurations, and ensures your file follows best practices. Manual creation is prone to mistakes that can seriously harm your SEO.

Q: Where should I place my robots.txt file on my website?

A: Your robots.txt file must be placed in the root directory of your website, accessible at yourdomain.com/robots.txt. It cannot be placed in a subdirectory or subfolder. Search engine crawlers always look for it at the root level first, and they won’t check other locations. Each subdomain needs its own robots.txt file if you want to control crawling separately.

Q: Can robots.txt improve my search engine rankings?

A: Robots.txt doesn’t directly improve rankings, but it helps search engines crawl your site more efficiently by directing them to your most important content. By blocking low-value pages (like admin areas, duplicate content, or filtered pages), you ensure crawlers spend their time on pages that actually matter for your SEO. This indirect benefit can lead to better overall search visibility.

Q: What’s the difference between “Disallow” and “Noindex”?

A: “Disallow” in robots.txt tells crawlers not to visit a page, but the page can still appear in search results if other sites link to it. “Noindex” (implemented via meta tags or HTTP headers) tells crawlers they can visit the page, but shouldn’t include it in search results. To keep a page out of search results, use noindex rather than robots.txt disallow, and leave the page crawlable so the noindex can be read.

Q: Should I block search engine crawlers from my staging site?

A: Absolutely yes. Use the “Disallow all” preset in our robots.txt generator for development and staging sites. Add this to your robots.txt file to block all crawling:

User-agent: *

Disallow: /

Additionally, use password protection or IP restrictions for extra security, as robots.txt alone isn’t a security measure.

Q: Can I use robots.txt to block specific pages or files?

A: Yes, you can block specific URLs, directories, or file types using custom rules. For example:

User-agent: *

Disallow: /private-page.html

Disallow: /*.pdf$

Disallow: /confidential/

Use our robots.txt generator’s custom rules section to add these specific directives.
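Patterns such as `/*.pdf$` rely on wildcard matching, where `*` stands for any run of characters and a trailing `$` anchors the end of the URL. The sketch below translates a pattern into a regular expression to show which paths it would match. `pattern_to_regex` and `matches` are hypothetical helpers that approximate the behavior described in RFC 9309; individual crawlers may differ in edge cases.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters; a trailing '$' anchors the end
    of the URL. Everything else is literal, matched from the path start.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces and join them with '.*' for each '*'.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def matches(pattern: str, path: str) -> bool:
    """Return True if the robots.txt pattern matches the URL path."""
    return pattern_to_regex(pattern).search(path) is not None


for pattern, path in [
    ("/*.pdf$", "/docs/guide.pdf"),
    ("/*.pdf$", "/docs/guide.pdf?download=1"),
    ("/confidential/", "/confidential/report.html"),
    ("/private-page.html", "/private-page.html"),
]:
    print(pattern, path, matches(pattern, path))
```

Note that the `$` anchor means a PDF URL with a query string is not matched by `/*.pdf$`, which may or may not be what you intend.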

Q: How often should I update my robots.txt file?

A: Update your robots.txt whenever you make significant changes to your site structure, add new sections that shouldn’t be crawled, or notice unusual crawler behavior in your server logs. At minimum, review it quarterly to ensure it still aligns with your SEO strategy. Our robots.txt generator makes updates quick and easy.

Q: Will blocking crawlers with robots.txt save server resources?

A: Yes, blocking unnecessary crawlers reduces server load and bandwidth usage. If you’re experiencing resource issues from aggressive third-party crawlers (like SEO tools), use our tool to selectively block SemrushBot, AhrefsBot, or other non-essential crawlers while allowing major search engines access.

Q: Can I have multiple robots.txt files on one website?

A: No, you can only have one robots.txt file per host/subdomain. However, you can include different rules for different user agents within a single file. If you have multiple subdomains (blog.example.com, shop.example.com), each needs its own robots.txt file at its root.

Q: What happens if I don’t have a robots.txt file?

A: If no robots.txt file exists, search engines assume they can crawl everything on your site. This isn’t necessarily bad for simple websites, but most sites benefit from at least basic crawler control to block admin areas and manage crawl budget efficiently.

Q: How do I test if my robots.txt file is working correctly?

A: Google Search Console’s robots.txt report (which replaced the older robots.txt Tester) shows the file Google last fetched and any parsing errors or warnings. To check an individual page, run it through the URL Inspection tool, which reports whether crawling is blocked by robots.txt. This helps catch errors before they affect your search visibility. Always check files produced by any robots.txt generator.

Q: Can robots.txt completely hide my website from search engines?

A: Using “Disallow: /” blocks crawling, but pages can still appear in search results if they have external links pointing to them. For complete removal from search results, use noindex directives instead and leave the pages crawlable, because a crawler that is blocked by robots.txt never sees the noindex. If pages are already indexed, Google Search Console’s removal tools can hide them temporarily while the noindex takes effect.

Get Started

A properly configured robots.txt file is essential for effective SEO management and website optimization. Our free robots.txt generator tool simplifies the creation process, offering presets, crawler-specific blocking, and custom rule options to suit any website’s needs. By following best practices and regularly maintaining your robots.txt configuration, you ensure search engines efficiently crawl your most valuable content while keeping crawlers out of areas that don’t belong in search results.

Start using our robots.txt maker today to take control of how search engines interact with your website and optimize your crawl budget for better search visibility.
